Assessing research impact: Perspectives from a provincial health research funding agency

17 October 2018

Assessing research impact is complex and challenging, but essential for understanding the link between research funding investments and outcomes both within and beyond academia.

Here Dr. Julia Langton, MSFHR’s former manager, evaluation & impact analysis, provides an overview of how we approach research impact assessment at the Foundation, and some of the tools in our assessment toolbox.

Forward Thinking is MSFHR’s blog, focusing on what it takes to be a responsive and responsible research funder.

When people ask me what I do for work, I tell them that I do research on research (in other words, I assess the impact of research). Research impact assessment is a complex and rapidly evolving field that is fraught with challenges and can be approached from many angles depending on your vantage point (e.g., researcher, university, funder, government). So where do we start?

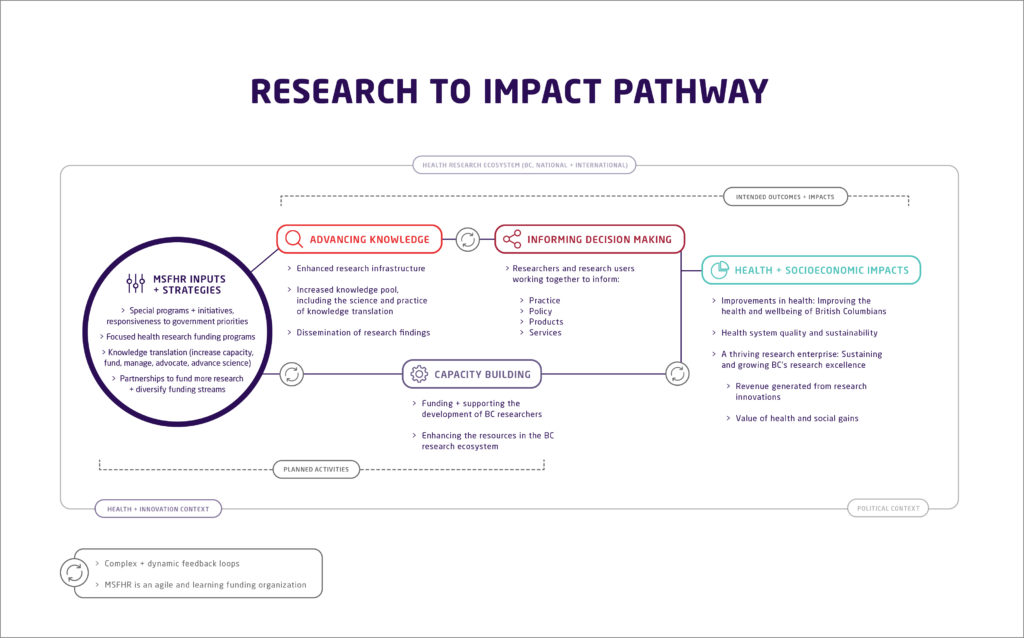

As BC’s health research funder, we use research impact assessment practices to understand the link between research funding and outcomes and impacts both within and beyond academia. For example, we examine the ways in which research builds provincial research capacity and advances knowledge that is high quality and accessible, so it has the best chance of influencing health policy and practice and ultimately improving health outcomes for British Columbians. This is no easy feat, but luckily we aren’t the only ones looking to understand the link between research and impact.

In this blog we outline some of the tools in our toolbox as well as some useful tips for approaching research impact assessment.

Our research impact assessment toolbox

There are dozens of conceptual frameworks and approaches to research impact assessment across the globe, which can be intimidating for someone new to the field (if you’re interested, this review by Greenhalgh and colleagues, and the London School of Economics’ blog are good places to start). But the good news is that, for the most part, frameworks are more similar than they are different in their quest to understand research impact.

In Canada, we are lucky to have a thriving community of practice, made up of provincial and national health funders and research organizations, that has come together to move the research impact agenda forward. By using and adapting the Canadian Academy of Health Sciences (CAHS) framework for impact assessment to local environments, we benefit from a common language for evaluating and communicating the impact of investments in health research. The CAHS framework uses five impact categories (advancing knowledge, capacity building, informing decision-making, health impacts, and socio-economic impacts) and provides a menu of nearly 70 indicators that map onto these domains. At MSFHR we have adapted the CAHS framework to our local context. This framework forms the heart of our organizational evaluation and impact analysis strategy and shapes our approach to understanding the impact of our investments in health research.

Drawing on national and international resources and organizations (such as ISRIA – International School on Research Impact Assessment) also helps cut through contextual differences and provide broad guidance for effective research impact assessment.

Over the summer, we added an exciting new tool to our research impact toolkit with the release of the Canadian Health Services and Policy Research Alliance (CHSPRA) whitepaper, which provides a deep dive into how to assess the impact of health research on decision-making.

This new framework, developed collaboratively by the Canadian health research community, builds on foundations established by the CAHS framework nearly a decade ago. MSFHR is one of many health research organizations that will be a test site for implementation of this framework. As part of this, we are currently developing a research impact assessment plan that will focus on evaluating our programs and initiatives that inform health sector planning and decision-making and address BC’s health system priorities.

Assessing research impact – where do we start?

We know that assessing research impact is complex, and that different approaches are appropriate in different circumstances. Based on what we have learned at MSFHR as we continue to grow our capacity in this area, and my experience in the field, I’ve outlined three tips for research impact assessment. Interestingly, these are not technical (i.e., it’s not a list of indicators or a performance measurement framework), but instead they highlight the importance of how you approach evaluation and research impact assessment.

- When it comes to measurement, don’t oversimplify with one measure or an overreliance on quantitative data.

In short, there is no magic bullet. As an evaluator you are often asked “how do we know we are using the RIGHT measure?” or “what is our return on investment?”. Unfortunately, there is no one right measure, and return on investment in health research is a complex, multi-dimensional concept.

When it comes to measurement, it is important to select a group of measures that provide the best possible picture of what you are trying to capture, and to combine these numbers with narrative or qualitative data that reflects the views of different stakeholders. At MSFHR, we pull together information on our application numbers and success rates as well as peer reviewer and award holder feedback to understand how our programs are performing. One measure might be clean and tidy, but standalone measures rarely give a fair or complete picture of the issue you are trying to understand.

- Build capacity to use data within your organization.

No matter how good your evaluation team (or person), they cannot understand the full impact of your organization without the help of others. At MSFHR, we firmly believe that evaluation and understanding research impact is everyone’s business.

The best example of this is our program learning and improvement cycle, which involves pulling together data that represents the views of multiple stakeholders and discussing findings with staff from across the organization, each with different vantage points and interactions with the research community. Undoubtedly, the most valuable part of this process is not the data or information itself but the discussions about what these data mean from different perspectives. It is these discussions that have enabled us to connect data insights with actions to improve our suite of funding programs (one of the hallmarks of a data-centric organization).

It’s important to note that we didn’t get here overnight and we are still working to improve this process. The success of our program learning and improvement cycle is partly due to the capacity building work we’ve done to build a data culture. I’ll admit that “data culture” is a bit of a buzzword, but it is crucial. In a nutshell, it means that leadership prioritizes and invests in data collection, knowledge production and strategic use of that data across the whole organization, and staff are encouraged and supported to access and use that data in their day-to-day work.

Our involvement in a data culture project led by researchers at Emerson College and the MIT Center for Civic Media in the US really helped us kick-start this work at MSFHR.

- Join (or start) a community of practice.

I’ve already mentioned the benefits of the CAHS framework in providing provincial and national health funders with a common language and methodological approaches, but our community of practice extends beyond this.

We have rich and productive collaborations around research impact through an Impact Analysis Group, established in 2006 as part of the National Alliance of Provincial Health Research Funding Organizations (NAPHRO). The goal of the group is to share and apply best practices in health research impact assessment from the perspective of provincial health research funders. For example, we have developed harmonized data standards and indicator specifications that have enabled members to advance research impact assessment practices and understand the collective impact of provincial health research funding organizations. This community also functions as a way to share resources, challenges, learnings and opportunities, and simply bounce ideas around before implementing them locally.

The challenges inherent in research impact assessment are well known and we certainly don’t have all of the answers. It will take time and resources to fully understand, for example, the link between research funding and changes in policy and practice. We know it can take years, and in some cases decades, for impacts to emerge, and that the influence of research on policy and practice is more often diffuse and protracted than direct and instrumental. But if we want to move the needle on these tough questions, we need to be careful not to oversimplify, to build a data culture within our organizations, and to look broadly to our community of practice for guidance, support and inspiration.